Retro Computing (on Steroids)


- This topic has 83 replies, 24 voices, and was last updated 10 July 2023 at 09:24 by Gerard O’Toole.


16 December 2020 at 21:51 #513897

Frances IoM (Participant, @francesiom58905)

Dave – simplest is a fixed-size array. This will, however, limit the maximum number of digits in any derived number, but an array of bytes will suffice as long as there is a check that the maximum is not exceeded.

Personally, I guess I ought to code it, but I don’t have any C compiler set up on this machine, which is used for email but more for database work on WW1 internees, with limited browsing when I get bored with such work.

My own feeling is just to use a simple C struct of a byte (holding the length of the sequence) plus a byte array [size less than 255].
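A minimal sketch of the scheme in this post, written in Python rather than C purely for illustration. The names `Number`, `from_int`, `to_int` and `add`, the capacity of 254 digits, and the least-significant-digit-first layout are all assumptions, not anything from the thread. It handles non-negative numbers only: digits are summed position by position, overflows go into a separate carry array, and the carry array is added back in until it is all zero or the fixed size would be exceeded.

```python
from dataclasses import dataclass, field

MAX_DIGITS = 254  # assumed capacity, below 255 so the length fits in one byte


@dataclass
class Number:
    """A length byte plus a fixed array holding one decimal digit per byte,
    least significant digit first (layout is an assumption)."""
    length: int = 1
    digits: bytearray = field(default_factory=lambda: bytearray(MAX_DIGITS))


def from_int(value):
    """Build a Number from a non-negative Python int."""
    text = str(value)
    if len(text) > MAX_DIGITS:
        raise OverflowError("too many digits for the fixed array")
    num = Number(length=len(text))
    for i, ch in enumerate(reversed(text)):
        num.digits[i] = int(ch)
    return num


def to_int(num):
    """Convert back to a Python int, for checking results."""
    return int("".join(str(d) for d in reversed(num.digits[:num.length])))


def add(a, b):
    """Add two Numbers, resolving carries through a separate carry array."""
    total = Number(length=max(a.length, b.length))
    for i in range(total.length):
        total.digits[i] = a.digits[i] + b.digits[i]  # 0..18 until carries are resolved
    while True:
        carry = bytearray(MAX_DIGITS)  # separate array holding the overflows
        for i in range(total.length):
            if total.digits[i] > 9:
                if i + 1 >= MAX_DIGITS:
                    raise OverflowError("sum exceeds the fixed array size")
                total.digits[i] -= 10
                carry[i + 1] = 1
                total.length = max(total.length, i + 2)
        if not any(carry):  # carry array = 0, so the sum is final
            return total
        for i in range(total.length):
            total.digits[i] += carry[i]
```

For example, `to_int(add(from_int(87), from_int(78)))` gives 165. A single left-to-right pass with a running carry would be shorter; the repeated carry-array pass is used here only because it is what the post describes.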

Another similar struct is used to hold overflows from the addition of two digits; it is then added to the derived sum, with any carries noted and added repeatedly until the carry array = 0 or the result overflows the maximum length.

16 December 2020 at 22:23 #513899

Another JohnS (Participant, @anotherjohns)

Posted by Frances IoM on 15/12/2020 21:50:02:

> IanT – I'm old – Honeywell 316 + 516s – one of my home computers is in the Science museum collection (long story but one of the first UK built machines – 68008 based running a variant of Unix in the late 70s)

Well, my shared Virtual Reality stuff is in the Canadian Science and Tech museum; I can confirm that by the time I created this stuff, we were well and truly into Hexadecimal.

(I remember the 68008 being released – are you sure about the decade? I would have pegged it later; in the 70s, it was almost all 8 bit processors, if I remember that far back correctly) (no matter, those were the fun days; I loved the 1802 because as a kid, I could single step it and debug the hardware with only a really inexpensive analogue volt meter)

16 December 2020 at 22:45 #513902

Frances IoM (Participant, @francesiom58905)

It was very early; it may have been in the first years of the 80s, certainly by ’82 at the latest. What the Science Museum got was a working machine built from the surviving working models, but I know mine was in full working order when I swapped it with the company for a 286 system, so I assumed most of the gift was my machine. I was a consultant and paid towards my machine, which meant I was free to use it for other purposes (I wrote cross-assemblers in C for use in a teaching lab and, later on, a set of tools for a universal cross-assembler for industrial use). I know I was the first staff member to have a home computer that was not a toy, though by current standards it was slow; I still have the CRT terminal. I think the company (only a small startup) had the machines a few months before me. The operating system was IDRIS, a Unix ‘lookalike’ by a guy who left Bell Labs; Bill Plauger rings a bell, but it was 40 years ago and my memory is not what it was.

Edited By Frances IoM on 16/12/2020 22:48:37

17 December 2020 at 00:04 #513917

Another JohnS (Participant, @anotherjohns)

Posted by Frances IoM on 16/12/2020 22:45:55:

> It was very early it may have been in first yrs of the 80's – certainly by 82 at latest – what the science museum got was a working machine built from the surviving working models, but I know mine was in full working order when I swapped it with the company for a 286 system so I assumed most of the gift was my machine – I was a consultant and paid towards my machine (meant I was free to use it for other purposes (I wrote cross-assemblers in C for use in teaching lab + later on a set of tools for a universal cross assembler for industrial use) – I know I was first staff member to have a home computer that was not a toy tho by current standards it was slow, I still have the CRT terminal) – I think the company (only a small startup) had the machines a few months before me – the operating system was IDRIS – a unix 'lookalike' by a guy who left Bell labs – Bill Plauger rings a bell but it was 40 yrs ago + my memory is not what it was
>
> Edited By Frances IoM on 16/12/2020 22:48:37

Ok, that makes more sense – I left high-school (Canada) in 1978 and University in 1982, and a lot changed in that time. That's how I remember time-frames – In high school it was the 1802 and 8080, by the end of University, it was totally changed. Things went quickly back then. (I guess they do today, too).

Many years ago (it would have been autumn/winter 1982, maybe spring 1983) I went on an overnight train here in Canada, a journey of almost 9 hours with almost nobody on it. My task was to further an S-100 bus-based 1802/8085 computer, wire-wrapped. The old crusty conductor (they were all crusty old conductors back then) came by and said in the typical gruff voice "What you building, a Bomb??" I said "Yeah"; his answer was "Good luck", and he kept on walking, checking to see if anyone was likely going to give him trouble.

These days, that cocky little kid would be taken down by a SWAT team or something equivalent.

Sigh!

17 December 2020 at 10:01 #513958

SillyOldDuffer (Moderator, @sillyoldduffer)

Posted by John Alexander Stewart on 16/12/2020 22:23:53:

> Posted by Frances IoM on 15/12/2020 21:50:02:
>
> > IanT – I'm old – Honeywell 316 + 516s – one of my home computers is in the Science museum collection (long story but one of the first UK built machines – 68008 based running a variant of Unix in the late 70s)…
>
> I remember the 68008 being released – are you sure about the decade? I would have pegged it later…

Memory's unreliable but I think you're both right. Late 70's I was a data processing department customer focussed on the future of microcomputers as office tools. The machines of the day were useful, but puny, but I saw no reason why they wouldn't eventually rule the world. (Mainframe colleagues disagreed violently!)

I was much taken with the promise of the Motorola 68000 family but they existed on paper long before hitting the street. Motorola must have had trouble making them in volume because they took years to arrive, and cost more than expected. Delayed so long that Intel grabbed the PC market by releasing the 8088 and successors, running MS-DOS and Windows. Intel's chips may have been technically inferior, but they worked and you could buy them.

The computing world would be very different had Motorola delivered the powerful 68000 family on time: we would all have gone UNIX, and Microsoft would never have existed!

Dave

17 December 2020 at 10:02 #513960

Frances IoM (Participant, @francesiom58905)

Wire-wrapped systems were great until you made a mistake, usually discovered after you had wired another lead to the same post; then it could take 100x the time to correct the fault.

The 68008 system also used the S100 bus – probably the most mismatched scheme possible but I guess it allows use of existing boards.

Edited By Frances IoM on 17/12/2020 10:03:06

17 December 2020 at 12:17 #513981

Roger Hart (Participant, @rogerhart88496)

@SOD, liked the palindrome, put it into my list of pythons. Reminded me of the back pages of Sci Am way back.

Anyone know how the CMM2 produces VGA? Is it some kind of fast loop, or does it use DMA? Just curious; I'm not really sure if I want to spend too much time on a VGA gadget, but I have a VGA screen that has been kicking round the shop for too long.

18 December 2020 at 16:53 #514201

IanT (Participant, @iant)

Posted by Roger Hart on 17/12/2020 12:17:35:

> Anyone know how the CMM2 produces VGA. Is it some kind of fast loop does it use dma? Just curious, not really sure if I want to spend too much time on a VGA gadget, but I have a vga screen that has been kicking round the shop for too long.

Hi Roger,

The VGA graphics are generated by the underlying hardware but much of the 'programmable' functionality is built into the BASIC engine. The CMM2 is built around a single MCU chip (carried on a 'Waveshare' module), which uses a Cortex-M7 32-bit RISC CPU core, running at 480 MHz and producing 1027 DMIPS. The MCU also has a double-precision FPU and a Chrom-ART graphics accelerator. So just about every 'hard' feature of the CMM2 is on the MCU chip, with just a few external components to enable connections to the real world.

MM Basic is closely coupled to this hardware platform, meaning that it has direct access to the 'metal', and whilst firmware upgrades have been happening pretty regularly, the MCU provides a well-defined (i.e. fixed) hardware reference to base these upgrades upon. The VGA graphics are a good example, with very fast hardware combined with firmware designed to get the best out of it.

In many ways I think that's why the "Retro" guys like the CMM2 so much – it's like the 80s home computers (such as the C64, Atari and Amiga), which were specific (i.e. stable) hardware platforms that could be explored and further 'tweaked'. There are disadvantages to devices like the RPi, where both the OS and underlying hardware are constantly evolving – for some, they present a constantly moving target…

Not really my thing, but the retro-gamers are re-coding old 80s games (in Basic) and getting 30 frames per second performance (it was originally half that). They've also recently introduced 3D graphics commands into the CMM2's Basic, which are based on advanced MATH functions that I (frankly) don't understand but which apparently have all sorts of other useful applications.

The key thing is that MMB is implemented to get the best from its platform – be that a tiny 28-pin MicroMite or the CMM2. There's nothing in between to suck performance away. Many might see that as a major disadvantage but clearly some do not – and that is who this little box of tricks is for.

With regard to VGA/HDMI – there are a lot of adapters around, ranging from a fiver upwards. CMM2 owners seem to like the GANA boxes, which are a bit more (about £12 on Amazon). I've got several older VGA monitors that still work very well and in fact I use an HDMI to VGA converter to connect my Pi 3B to one.

Regards,

IanT

Edited By IanT on 18/12/2020 16:56:35

27 April 2023 at 18:24 #642822

Brian Smith 1 (Participant, @briansmith1)

28 April 2023 at 09:11 #642898

SillyOldDuffer (Moderator, @sillyoldduffer)

Posted by Brian Smith 1 on 27/04/2023 18:24:40:

> I'll pass this on as an interesting video
>
> Program like a rockstar…

Wow! Thanks for that. Trouble is, it made me feel stupid. I knew about the Game of Life at the time, but thought it was just an interesting novelty. Ditto Mandelbrot. Forty years later I find out clever chaps have taken computer science to a whole new level, whilst I was plodding along…

I'm not musical but Sonic Pi has got to be tried! (The website implies it's only available for Mac, Windows, and Raspbian: not so – it's in the standard Ubuntu repository.)

Dave

28 April 2023 at 11:29 #642926

Bazyle (Participant, @bazyle)

"sound of code". Anyone else remember attaching an amp to chip select lines to get a feel for whether the computer was accessing the right bits of memory?

Interesting that despite the advances in computing people inventing new programming systems still resort to stilted syntax. Nowadays their simple example 'haunted bells' should, instead of several awkward code phrases, be an English sentence like "play random bell sounds". Roll on AI.

28 April 2023 at 15:59 #642947

Peter G. Shaw (Participant, @peterg-shaw75338)

I’ve just read through this thread, and very interesting it was too. But what seems to be missing is the “user experience”. It’s all very well going on about the differences between C, C+ & C++, but what about the poor old end user who has to put up with the cockups made by so-called professional programmers (and their advisors!!!). So what about the following?

Let’s start with Dijkstra’s famous dictum "It is practically impossible to teach good programming to students that have had a prior exposure to BASIC: as potential programmers they are mentally mutilated beyond hope of regeneration." Who the heck is he to make such a wide ranging nasty comment about BASIC programmers? To me it smacks of one-up-manship, or perhaps pure arrogance by suggesting that I, with a smattering of BASIC experience can never become a programmer to his standards. Ok, then what about these examples of so-called professional programming!

Example 1. I was completing an application form – doesn’t matter what for, but there was a drop down list of occupations. So, ok, I chose retired. Two questions further on, and there was a question “Employer’s Business”. Eh! What? I’m retired, hence I don’t have an employer. How difficult is it to write the programme such that if my occupation was shown as “retired”, then the question about employer’s business would be skipped?

Example 2. A different company, not too sure this one wasn’t a jumped up Building Society, but a drop down list of occupations, so I immediately went down about 2/3 and started looking for “Retired”, only to discover that there was no order in the list, none whatsoever. Again, how difficult is it to put the list into alphabetic order?

Example 3. My doctor’s this time. In order to request repeat prescriptions via the internet there is an online system. It requires certain information, including mobile ‘phone number. Now, I do have a mobile ‘phone, but it’s for MY purposes, not anyone else’s. E.g. I have had a heart attack, so I always take the ‘phone with me when I’m out just in case I need to call for assistance. But, and it’s a big but, I absolutely do not want all the world being able to contact me when I’m away from home – I have a landline for that. So I did not enter the mobile ‘phone number. All was well, I was able to request repeat prescriptions ok. But then, they decided to update/improve security. Fair enough, but I couldn’t comply. Why? Because I had not, and now could not, enter a mobile ‘phone number. And so I had to use the old method of telephoning and leaving a message on the answering machine. Which of course, was quicker, easier, faster, you name it, the ‘phone beat t’internet hands down.

Example 4. My dentist this time. A nice easy method of inputting personal & medical data via t’internet. But one question was “Do I require antibiotic insurance?” Or something similar. Eh! What the h*** is that? Ok, ignore it and carry on. Then insert my DOB, something like 01 Jan 2001. (Ok, not really but it’ll do.) So that was what I entered. Failed – I had to enter Jan 01, 2001. And then it dawned on me – blasted American software! At which point I went to bed. The following day I carried on, but I couldn’t – the system locked me out, so I had to ‘phone the dental surgery to get it re-opened. Fortunately all my previously entered data was still there, but if it had not….?
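As a hedged illustration of Example 4: a form could accept more than one way of writing a date instead of rejecting everything but the American order. The sketch below uses Python's standard `datetime.strptime`; the function name `parse_dob` and the two accepted formats are assumptions for illustration, and month abbreviations such as "Jan" are matched according to the current locale (English by default).

```python
from datetime import date, datetime

# Accepted spellings, tried in order (assumed for illustration):
# "01 Jan 2001" (day first) and "Jan 01, 2001" (month first).
ACCEPTED_FORMATS = ("%d %b %Y", "%b %d, %Y")


def parse_dob(text):
    """Return a date for any accepted spelling, or raise ValueError."""
    cleaned = text.strip()
    for fmt in ACCEPTED_FORMATS:
        try:
            return datetime.strptime(cleaned, fmt).date()
        except ValueError:
            continue  # try the next format
    raise ValueError(f"unrecognised date: {text!r}")
```

Both `parse_dob("01 Jan 2001")` and `parse_dob("Jan 01, 2001")` return the same date. Purely numeric dates are left out on purpose, because 01/02/2001 is ambiguous between the UK and US orders.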

As a consequence, programmers and their advisors, huh, I’ve absolutely no faith in them.

Now ok, I accept that there are cost implications, but why, oh why do we have to use software that doesn’t do what it is supposed to do? How difficult is it to retain at the back of your mind that this is a list, and should be in alphabetic order? Does everyone have a mobile ‘phone? (Answer here is no.) Surely American software should be checked for UK usage?
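For what it is worth, the fixes asked for in Examples 1 to 3 are small. The sketch below is an assumption-laden illustration, not anyone's real form software: the occupation list, the `NO_EMPLOYER` set and the function names are all hypothetical. It sorts the drop-down list alphabetically, skips the employer question for occupations without an employer, and marks the mobile number as optional.

```python
# Hypothetical occupation list, deliberately stored out of order.
OCCUPATIONS = ["Teacher", "Retired", "Engineer", "Nurse", "Unemployed"]

# Occupations for which an employer question makes no sense (assumed).
NO_EMPLOYER = {"Retired", "Unemployed"}


def occupation_choices():
    """Drop-down contents in alphabetic order, ignoring letter case."""
    return sorted(OCCUPATIONS, key=str.casefold)


def questions_for(occupation):
    """List the questions to ask, skipping ones that cannot apply."""
    questions = ["Name", "Occupation"]
    if occupation not in NO_EMPLOYER:
        questions.append("Employer's business")
    questions.append("Mobile phone number (optional)")
    return questions
```

With these definitions `questions_for("Retired")` never asks about an employer, while `questions_for("Engineer")` does.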

So, discuss the merits of C, C+, C++ to your heart’s content, but please, please, consider the end user!

Oh, incidentally, my programming experience is limited to SC/MP (INS 8060) which taught me about machine code & buses, Z80 machine code, Sinclair Basic for the ZX80, ZX81 & Spectrum, Basic 80 (which we called Mbasic) and Tiny Basic from which I managed to rewrite a Startrek programme for the ZX81 and then the Spectrum. So not really very much. And frankly, at my age, nearly 80, I really can’t be bothered anymore.

Cheers,

Peter G. Shaw

28 April 2023 at 16:11 #642948

Frances IoM (Participant, @francesiom58905)

There used to be a question often put to those programmers who made too many assumptions about the users forced to use their software: ‘Have you tried to eat your own dog food?’ (bowdlerised to meet today’s woke snowflakes).

28 April 2023 at 19:21 #642978

SillyOldDuffer (Moderator, @sillyoldduffer)

Posted by Frances IoM on 28/04/2023 16:11:24:

> there used to be a question often given to those programmers who made too many assumptions about the users forced to use their software 'have you tried to eat your own dog food ?' (bowdlerised to meet today's woke snowflakes).

Peter's probably aware the contempt is mutual:

- The only good user is a dead user

- Code 18 – the problem is 18" in front of the screen

- User is spelt luser (with a silent L when one is in the room).

- Wetware

- Picnic – Problem in Chair Not in Computer

As for programmers getting the blame for poorly designed systems, they're rarely responsible. They implement whatever is asked for. Or the business buys a package and configures it themselves.

Users are found to have computers full of porn, and – apparently – have no idea who loaded it and the malware. Need I go on…

Dave

PS.

Dijkstra was right about BASIC, COBOL, FORTRAN and most other early computer languages. They were all badly flawed and he deserves full credit for pointing out what was wrong. In consequence, modern computer languages are much better thought out. Modern BASIC is considerably different from the original. There is of course no connection between Dijkstra's insight into computer language design issues and the date format used by Peter's dentist!

28 April 2023 at 19:36 #642981

Fulmen (Participant, @fulmen)

I had a friend who programmed in straight hex. I'm not totally convinced he was human.

28 April 2023 at 20:31 #642988

Peter G. Shaw (Participant, @peterg-shaw75338)

Dave,

Unfortunately, my admittedly limited knowledge of programming etc does not insulate me from some of the things I have experienced. And some of that experience involved a specific sysop who was known for trying to prevent people who knew what they were doing, from doing it. This was when I was in fulltime employment as a telephone engineering manager.

In the instance I am thinking of, our group was given one, yes a single, access to a small mainframe computer. (Actually, I don't know what the machine should be called, but hey ho…) Now, in our group there were six of us who needed access to this machine at various times. We were all 'phone engineers and we found out that this circuit was a 2 wire circuit, so the obvious answer, to us, was to use a 2 pole x 6 way switch and wire all our individual machines to this switch, and hence to the mainframe, but we weren't sure. Needless to say, the sysop was not really forthcoming so I went to see him, told him to shut up, listen, and answer our/my questions (yes, I pulled rank), e.g. we understand it is a 2 wire circuit, yes or no? Is it possible to switch it between 6 PCs using a switch box? It turned out that there was no problem at all with switching the circuit, but he, the sysop, would accept no responsibility for lost data due to incorrect switching. Fair enough, but why couldn't he tell us straight away? Needless to say, we wired it up ourselves and it was dead simple: "Anyone using the mainframe?" If no, then "Peter (because it was nearest to me), can you switch it please?". "Right – done". We never had a problem.

Another problem was that I came across a colleague who was struggling with three databases – he had to shut down his computer to change to another database. Apparently he was told by his support group that it was not possible to merge the three into one! I took a copy of one of the files, and experimented, and discovered that there were two bytes which held the number of records in hex, in the usual method. (I must admit I've forgotten which way round it was, Hi-Lo or Lo-Hi). I then took a copy of another of the databases and managed to successfully merge them. As a result, my colleague and I agreed a day for the merging and in the morning, I copied all three files, merged them, and my colleague then successfully ran the new file. Of course, the original files were kept just in case.
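The idea of a two-byte record count, and the Hi-Lo versus Lo-Hi question, can be sketched as follows. The real database format is not given in the post, so everything here is hypothetical: a file is assumed to be a two-byte count followed by fixed-size records of `RECORD_SIZE` bytes, and the function names are made up. Python's standard `struct` module reads the count in either byte order (`"<"` for Lo-Hi, `">"` for Hi-Lo).

```python
import struct

RECORD_SIZE = 16  # hypothetical fixed record length in bytes


def read_count(data, byte_order="<"):
    """Read the two-byte record count; '<' is Lo-Hi, '>' is Hi-Lo."""
    (count,) = struct.unpack_from(byte_order + "H", data, 0)
    return count


def merge(files, byte_order="<"):
    """Concatenate the records of several files under one new count."""
    records = b""
    total = 0
    for data in files:
        count = read_count(data, byte_order)
        body = data[2:]
        if len(body) != count * RECORD_SIZE:
            # A mismatch usually means the byte order guess was wrong.
            raise ValueError("header count does not match file length")
        records += body
        total += count
    if total > 0xFFFF:
        raise OverflowError("merged count no longer fits in two bytes")
    return struct.pack(byte_order + "H", total) + records
```

The length check doubles as a cheap way to find out which way round the two bytes are: only one of the two orders normally gives a count that agrees with the file size. As in the post, work on copies and keep the originals.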

In another instance, we had to use the electronic equivalent of punched cards, each one requiring a specific header appropriate to the exchange concerned. This was on a mainframe elsewhere in the country. I discovered that there was a batch processing system available and managed to write a batch program to create a file and insert the appropriate 1st line data. Unfortunately, I made the grave mistake of leaving my name within the code, and consequently some years later was asked about it. Having forgotten all about it, I denied all knowledge until shown the evidence.

I do understand why some of the comments above have arisen, indeed at one time I was tasked with installing one of the early Windows versions on all of our computers. I was surprised to discover how many were set up as if in America! I also know of a clerical assistant who adamantly refused to set her VDU at the recognised HS&E approved height.

I won't go any further, but as I said, I do understand how some of these comments have arisen, especially having seen the apparent lack of ability displayed by some users. It still doesn't excuse the poor programming I discussed above.

Dijkstra may well have been right, but what little I read suggested he was a high handed twerp who thought he, and he alone, knew what was going on, and to come out with that statement simply shows a deep disdain for other people and a total lack of understanding of them.

Cheers,

Peter G. Shaw

28 April 2023 at 22:33 #643003

IanT (Participant, @iant)

Well, I started this thread about the very powerful CMM2 (MMBasic based) 'Retro' computer but it seems we have had a fair amount of topic drift (once again) into the realms of what programming language is best etc etc etc – so I'll throw another two pennies on the fire (again).

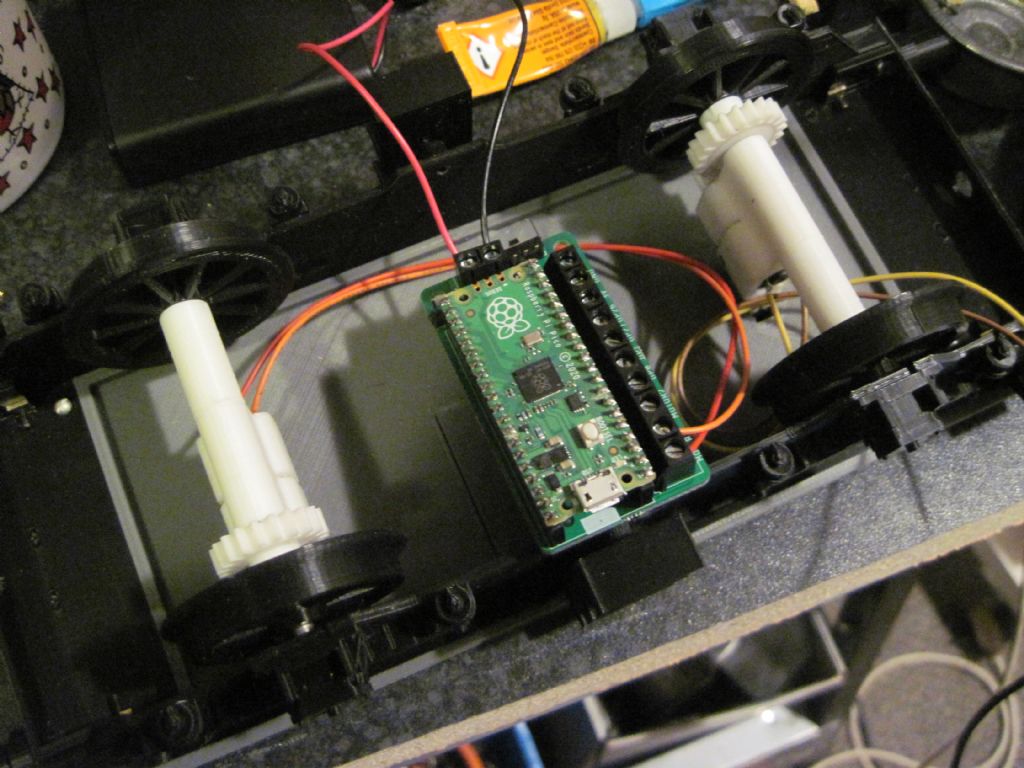

The very fast chip that powers the CMM2 is still not currently available, so my industrious fellow 'Mite' enthusiasts have been busy focusing on the RPi Pico chip. As I've mentioned before, you can build a very powerful (and self contained) EMBEDDED computer for just a few pounds with a Pico and MMBasic. Note the 'Embedded' – I'm not trying (and never will try) to build a large database server or similar and certainly not an AI! Just simple little programmes that can turn things on and off, record data and run motors or servos etc. Very simple but useful things….

For example, recently I've been somewhat distracted with a Gauge '3' version of a L&YR Battery Electric Locomotive that you can 3D print for very little money. In my version (for eldest Grandson) it has a PicoMite controlling the two DC motors (via a Kitronik Pico Controller) with an InfraRed (TV) Controller that costs in total about £15. It's only about 12-15 lines of actual code plus some commentary (I'm also looking at a version using an HC-12 link for more range).

So I'm not at all interested in building huge apps, just my simple little programmes where the PicoMite has far more power than I need in practice, is very quick & simple to programme & debug and costs just a few pounds in hardware. I don't want to learn new IDEs or languages (e.g Python). I have everything I need in one place with no external 'libraries' to worry about either!

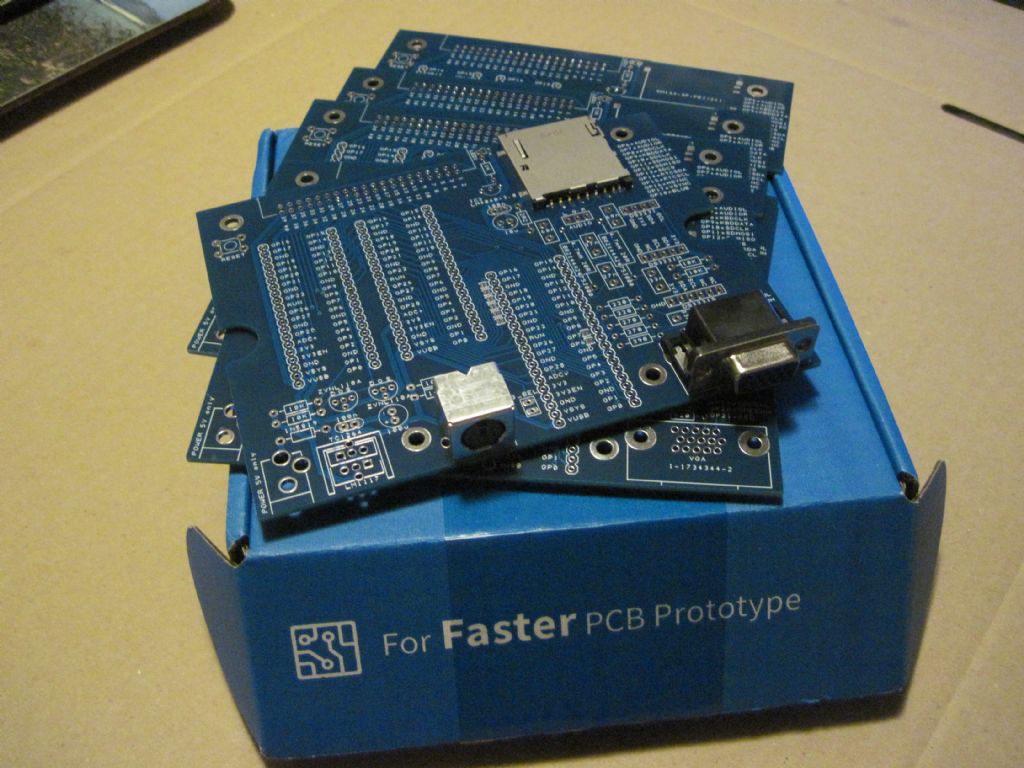

As for "Retro" (which is where this thread started) I've also now got the parts to build my PicoMite "VGAs", which use the 2nd ARM CPU (& one PIO) to generate the VGA signal, whilst leaving the first CPU free to run MMBasic at full pelt. Again, eldest Grandson is going to be the lucky recipient of one and Grandad is going to use another as a development system (for add-on 'STEM' goodies for him) as well as a Logic Analyser (for me!) when required. The other PCBs will most likely be configured as just 'simple' PicoMite (i.e. no VGA) systems. The PCBs shown have two Pico pin compatible 'slots' as well as SD Card and RTC facilities (so are still very useful even without the VGA parts installed). The full PicoMite VGAs are costing about £20 each to build btw, less if no VGA parts are required. I'm also looking at using the latest (PicoW-based) version of the PicoMite (PicoWeb) to set up a monitoring system which can send me an email (when triggered) but I've got some reading to do first.

So a 'Retro' VGA computer if you want one (or alternatively a large control panel display?) as well as a simple and inexpensive embedded controller for anything else you might need. I've tried Arduino but much prefer the Mite for ease of programming and (as importantly) debugging. If you need a simple 'controller' then I'd suggest that the combination of the RPi Pico + MMBasic is very hard to beat.

Got a 'Control' problem? Download the 140+ page PicoMite manual and just take a look. Comms, I/O, File System, Graphics, Sound & Editor. There's just a huge amount of functionality packed into the PicoMite package, all for free plus £3-4 for a Pico to run it on!

You can find more details of the basic PicoMite here: PicoMite and the PicoMiteVGA here: PicoMite VGA

Regards,

IanT

19 May 2023 at 10:14 #645804

Rooossone (Participant, @rooossone)

I am not sure how much use this would be, but it is still interesting for the subject of retro computing. There is a guy called Ben Eater who walks you through making a retro 6502 breadboard computer.

I'll let you all discover his content without me ruining any description of it…

He also has a lot of YouTube content that goes through the implementation and theory of building a very basic RISC style CPU.

8 July 2023 at 12:38 #651300

David Taylor (Participant, @davidtaylor63402)

An interesting thread!

I wouldn't take offence at Dijkstra. He knew what he was about but loved trolling.

The chip powering the CMM2 is quite a beast. Putting a quick booting BASIC on there might give some flavour of the retro experience but given it's a system-on-a-chip I feel the fun of learning and being able to understand the computer at a hardware level, like you could an 80s micro, will be missing.

Unfortunately I don't think you can get that experience now – even 8-bit retro kits don't have the cool sound and video ICs we used to have in our 6502/Z80/68000 micros, and stop at a serial terminal or perhaps an FPGA VGA generator.

FWIW, I hate Python's significant indentation idea. I also take exception to the idea it's a great beginner's language. It's the most complicated language I know. I like it, and it's powerful, but it's *not* simple. It also seems to still have numerous ways to shoot yourself in the foot left over from when it was one guy's plaything. I've been using it for about 2 years full time and feel I've barely scratched the surface of what it offers.

I'm not sure I agree C is a good language for large code bases, despite the fact there are many large C code bases. Its preprocessor and simplistic include file system would not be tolerated in any modern language – most of which have tried to learn from the pain C inflicts in this regard!

8 July 2023 at 18:30 #651329

Nigel Graham 2 (Participant, @nigelgraham2)

Above my grade to use, but I am interested to see these little computers and their applications; and impressed by their users' skill with building and programming the systems.

.

When I first joined the organisation that employed me till I retired, MS still used DOS, and our laboratory programmes were written on site, by the scientists themselves, not some IT department. They used Hewlett-Packard BASIC to drive various HP and Solartron electronic measuring instruments, printers and plotters.

In my lab, until they started to make the equipment computer-driven, each standard test involved lots and lots and lots of individual readings (about 6 values per iteration for some tens of iterations), writing them on a pro-forma sheet, then typing the numbers into a BBC 'Acorn' with a locally-written BASIC calculator that printed the results on a dot-matrix printer.

With one exception these programmes were all quite easy to use and worked very well – perhaps because they were written by their main users not a separate department, let alone some remote "head-office" wallah or contractor.

The exception was caused by its writer neglecting to provide an entry line for one important instrument-setting variable (e.g. test volts or frequency), so the user had to edit the internal line instead. That is poor programming, not a criticism of BASIC (or any language).

.

When I bought my first PC, an Amstrad PCW9512, I started teaching myself its own form of BASIC, using a text-book.

I don't think there was anything intrinsically wrong with BASIC, apart perhaps from being character-heavy to be readable, but its bad reputation stems, as I was told at the time, from it being too easy to fill with thickets of over-nested loops and the like. That is a programming, not programme, problem though; and I gather you can write much better-constructed programmes than that in it.

.

That Amstrad's auxiliaries included the compiler for a horror called "DR- [Digital Research] LOGO" . It was claimed to be designed to help school-children learn something called "Structured Programming" – as clumsy and tautological as calling a house a "Structured Building" . In fact it was so telegrammatic and abbreviated that it was not at all intuitive or easy to read; and despite using a printed manual containing examples such as list-sorters, I failed to make any headway with it.

Around the same time a friend gave me a Sinclair ZX plus a box of its enthusiasts' magazines, but that was beyond me! Its version of BASIC was hard enough, but to get the best out of it you also needed to understand Assembly-language programming.

.

Not sure if it will run on WIN11 but I do have an old compiler for POV-Ray, which uses command-lines to draw pretty pictures. It is not a CAD application but an artistic one, still fun though!

.

I have seen C++ code and managed to see roughly what some of it does, though I have not tried to learn it. Once I saw a screenful one of the scientists at work was writing. Among it was a mathematical line followed by a comment:

" ! This is the clever bit. "

Further down, more hard sums were introduced by,

" ! This bit's even cleverer! "

8 July 2023 at 19:33 #651334

SillyOldDuffer (Moderator, @sillyoldduffer)

Posted by David Taylor on 08/07/2023 12:38:09:

> …FWIW, I hate Python's significant indentation idea. I also take exception to the idea it's a great beginner's language. It's the most complicated language I know. I like it, and it's powerful, but it's *not* simple. It also seems to still have numerous ways to shoot yourself in the foot left over from when it was one guy's plaything. I've been using it for about 2 years full time and feel I've barely scratched the surface of what it offers.
>
> I'm not sure I agree C is a good language for large code bases, despite the fact there are many large C code bases. Its preprocessor and simplistic include file system would not be tolerated in any modern language – most of which have tried to learn from the pain C inflicts in this regard!

Oh, go on then, I'll take the bait! Python may not be simple in the 'Up Jumped Baby Bunny' sense, but I can't think of a real language that's easier to learn, especially good when taken with a small dose of Computer Science. What languages are easier to learn than Python and why? What are these other languages good and bad at?

Hating Python's indentation rule suggests a misunderstanding. All computer programs are more readable when their structure is made clear, and indenting is good for readability. Highlighting the structure is vital as soon as someone else has to read my horrible code, and the concept is so important that Python enforces it. Learning with Python avoids a common bad habit, which is writing compressed code that only the author comprehends. And even he can't decode it when he comes back to the mess after a long gap.
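A small illustration of the point, with made-up function names: in Python the indentation is the structure, so moving one line in or out changes what the program does, and a reader can see which statements belong to the loop without hunting for braces.

```python
def total_and_count(values):
    """Sum the values and count them."""
    total = 0
    count = 0
    for v in values:
        total += v   # indented: runs once per value
        count += 1   # indented: runs once per value
    return total, count


def total_and_miscount(values):
    """Same code with one line dedented, shown only to make the contrast."""
    total = 0
    count = 0
    for v in values:
        total += v
    count += 1       # dedented: outside the loop, runs once
    return total, count
```

Here `total_and_count([1, 2, 3])` returns `(6, 3)` while `total_and_miscount([1, 2, 3])` returns `(6, 1)`. Whether that strictness is a help or a hazard is exactly what the two posters disagree about.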

How do you 'shoot yourself in the foot' with Python? My toes are intact!

Straightforward for individuals to write short simple programs in most languages, but doing that is a very poor test. The real trouble starts when programs get so big that teams have to manage hundreds of thousands or maybe millions of lines of code. C was designed from the outset to play in that space, which is why it has a complex pre-processor and linkage system. The C environment allows teams to work on complex debug, test, and live versions, and it can also target multiple platforms from the same code base – Windows, Linux, Mac, Android and others. C is also very good for microcontrollers and other tiny computers.

May not be the best of all possible languages for all time, but so far C has proved a hard act to follow, and in many ways C++ is C on steroids. The two are close relatives.

Tracking the rise and fall of computer languages over time is 'quite interesting'. Hugely popular big hitters like Perl, Ruby, PHP, Scala, Rust, Objective-C, Visual Basic, COBOL, Pascal, FORTRAN and BASIC have peaked and waned. A few bombed! Despite many likeable features and having the full support of the US DoD behind it, Ada failed to get traction.

If you want a programming job in 2023, learn C#, Javascript, Java, C/C++, Python, and SQL. C/C++ and Python are predicted to be in hot demand next year, but who knows. Whatever their warts, C and C++ surely deserve the endurance prize – C was much in demand back when COBOL and FORTRAN dominated the industry.

Dave

8 July 2023 at 20:02 #651337 Dave S

Participant @daves59043

Posted by IanT on 28/04/2023:

For example, recently I've been somewhat distracted with a Gauge '3' version of a L&YR Battery Electric Locomotive that you can 3D print for very little money. In my version (for eldest Grandson) it has a PicoMite controlling the two DC motors (via a Kitronics Pico Controller) with an InfraRed (TV) Controller that costs in total about £15. It's only about 12-15 lines of actual code plus some commentary (I'm also looking at a version using an HC-12 link for more range).

So I'm not at all interested in building huge apps, just my simple little programmes where the PicoMite has far more power than I need in practice, is very quick & simple to programme & debug and costs just a few pounds in hardware. I don't want to learn new IDEs or languages (e.g Python). I have everything I need in one place with no external 'libraries' to worry about either!

It’s nice to see one of my PCBs in the wild.

Dave

8 July 2023 at 21:47 #651343 IanT

Participant @iant

Well then Dave, I guess I am a happy customer of yours!

It's a very neat way to drive one or two small DC motors, so thank you.

Regards,

IanT

8 July 2023 at 22:06 #651344 Bazyle

Participant @bazyle

I was talking to an American University professor who mentioned that he and his department still used Fortran programming because it was simple and did the job. They used computers to do calculations not generate pretty pictures and fancy human-machine interfaces.

If Python indentation is an irritation just use Perl. Although it is not admitted, Python is just a mix of Basic and Perl.

Basic has always been good for beginners and children because it is interpreted, not compiled, making for a quick 'change and retest' environment, which is what beginners need and children like. 99% of the reason Python has taken off is that it is interpreted so has instant availability.

Weird programmer fact: The female Chinese hotel receptionist in the background in "Die Another Day" when Bond enters soaking wet was our Perl programmer who did some work as an Extra at Elstree Studios.

Edited By Bazyle on 08/07/2023 22:21:43

9 July 2023 at 01:04 #651355 David Taylor

Participant @davidtaylor63402

I'll start by emphasising I am a fan of Python, despite it having a lot of features I don't like, and I think it's an excellent language for modern programming idioms. I've been programming full time for money since I was 16, 36 years ago. I've written programs in C for Windows 3, dev tools in VB6(!), COBOL on various mini computers, lots of C/C++ on *NIX systems, a lot of Java, a lot of server-side stuff, distributed stuff, remote sensing, and embedded systems. I am hopeless at web programming and hate it with a passion. I'm nowhere near as good as I used to think I was, but I don't think I'm the worst programmer around. My first language was BASIC on the C64 and I don't think it hurt me.

I understand structured code, but forcing structure at that level just seems petty and not something a language should be concerned with. If the code 'in the large' is badly structured, some local indenting rules are not going to help. It can be confusing for IDEs because if you're pasting code at the end of an indented block the IDE can't tell if the code should be part of the indented block or part of the next piece of code at the next level out. It also means you can have code in a block that you didn't mean to be there. Delimiters at the start and end of code blocks avoid these problems. It's the same reason I *always* use curly braces in C/C++ code, even for single line blocks – then you don't end up with problems of code being in the wrong block.
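A minimal sketch of the misplaced-block problem described above, in Python. The function names and data are made up purely for illustration. The two functions differ only in how far one line is indented, and nothing in the language flags the second as a mistake:

```python
def total_positive(values):
    """Sum only the positive numbers."""
    total = 0
    for v in values:
        if v > 0:
            total += v  # inside the 'if': only positive values are added
    return total


def total_positive_misindented(values):
    """Same intent, but 'total += v' has slipped one level out,
    as can happen when pasting code at the end of an indented block."""
    total = 0
    for v in values:
        if v > 0:
            pass  # placeholder so the now-empty 'if' stays legal
        total += v  # outside the 'if': every value is added
    return total


print(total_positive([3, -2, 5]))              # 8
print(total_positive_misindented([3, -2, 5]))  # 6
```

Both versions run without any error or warning; only the result differs.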

Some of the problems with Python:

- You don't need a 'main' but if you don't have one you might get unexpected results. So they should have just required it. It's nice a beginner can write a one line program to print something on the console but if that causes grief in the majority of cases then it's a dumb idea. Eg, when importing a module, any code outside of a function in that file is executed. I guess you can argue this is good for initialisation code but it just seems wrong to me – it's like a side-effect.

- Default argument values that are lists or maps are initialised *once*, when the function is defined, rather than taking that value every time the function is called. That's insane and completely unexpected behaviour IMO.

- No real threading, and now, worse, the modern curse of 'async' code. As with JavaScript this only came about because the original implementation was as a toy language/quick hack with no thought of support for threads. And now programmers are starting to think this is normal and it's infecting languages that *do* have real threads. If you want a tight select() loop for I/O like in C, then just give support for that.

- Not requiring types for function arguments and returns. Argument names do *not* sufficiently describe their types, and the language is perfectly happy for you to give *no* indication of what a function returns, if anything. There is *optional* typing but it should be a thing you opt out of, not in to. Note that annotating types for functions the way it is done in Python does not force those types, it just tells the caller what to expect and what will work.
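Three of the points in the list above can be seen in a few lines. This is only an illustrative sketch: the function names are hypothetical, and the `None` default shown is one common workaround rather than the only one.

```python
def append_shared(item, bucket=[]):
    # The default list is created once, when 'def' runs, and reused by
    # every later call that does not pass its own 'bucket'.
    bucket.append(item)
    return bucket


def append_fresh(item, bucket=None):
    # Common workaround: default to None and build a new list per call.
    if bucket is None:
        bucket = []
    bucket.append(item)
    return bucket


def double(n: int) -> int:
    # The annotations are not checked at run time; a str is accepted too.
    return n * 2


def main():
    print(append_shared(1))  # [1]
    print(append_shared(2))  # [1, 2]
    print(append_fresh(1))   # [1]
    print(append_fresh(2))   # [2]
    print(double("ab"))      # abab


# Without this guard, main() would also run whenever the file is imported.
if __name__ == "__main__":
    main()
```

A separate static checker such as mypy would flag the `double("ab")` call, but the interpreter itself runs it without complaint.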

However, Python's strengths outweigh all of that. It has some awesome and powerful features these days, which as I said, I'm only just getting to grips with.

I completely disagree that Python is a child of BASIC and perl – I don't see any inherited traits from either! And no, I will not 'just use perl'. I have never willingly written a perl program, and only debugged them when there was no other choice.

Finally, I hate C++! I think it started badly and only ever gets worse. Java was a much better effort at a better C, except it's not much good for low level coding.

So people can more easily tell me I'm wrong, my favourite languages are C, Python, and Java. I think C# has a lot going for it too and would probably choose it over Java these days if I knew it better. Swift looks pretty good too.

I would replace C in a second with a better low level language. Rust, Zig, and Mojo are all languages I'd be happy to take on if I was paid to learn and use them.

C was not designed for large code bases, it was designed more like a very fancy macro-assembler. It has innumerable faults that have cost untold amounts of money. Its linkage system is painful and you get two choices – the symbol is global (even if not accessible, it still causes name clashes) or it's private to that compilation unit. The preprocessor is not a feature, it's a bug and is increasingly seen as such. C is actually a pretty nasty language where you need to be exceedingly careful not to stuff up but sometimes it's the only thing that will do the job. Luckily, nearly 50 years of C has exposed its faults and allows newer languages to try to avoid them. Rust is catching up quickly, even for embedded programming. I don't know what caused the great C explosion in the 80s, or why something more suited to high level programming didn't take off instead. But for code heads like me, C still has a weird attraction.

For the recreational coders out there, the annual Advent of Code challenge is a great way to get into a new language. We used it at work to all get into something different and as a good morning conversation topic.
