Posted by david bennett 8 on 15/08/2023 00:30:56:

Posted by duncan webster on 14/08/2023 13:34:21:

Posted by david bennett 8 on 14/08/2023 12:55:29:

Posted by John Haine on 14/08/2023 12:32:22:

Posted by SillyOldDuffer on 14/08/2023 11:40

Arguable that Fedchenko's transistor circuit is a very simple computer, but all it does is keep the pendulum swinging; it doesn't monitor or control amplitude and it tells you nothing about period.

Dave, don't get me wrong. I'm not against computers, but I wonder whether the sometimes spurious results thrown up might, over say a 1-year run, ruin your clock's performance. I doubt that Fedchenko's simple single transistor circuit would suffer from this too much. …

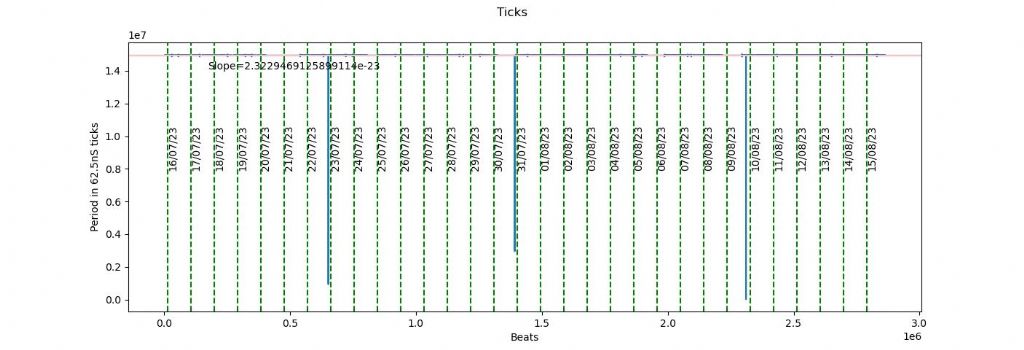

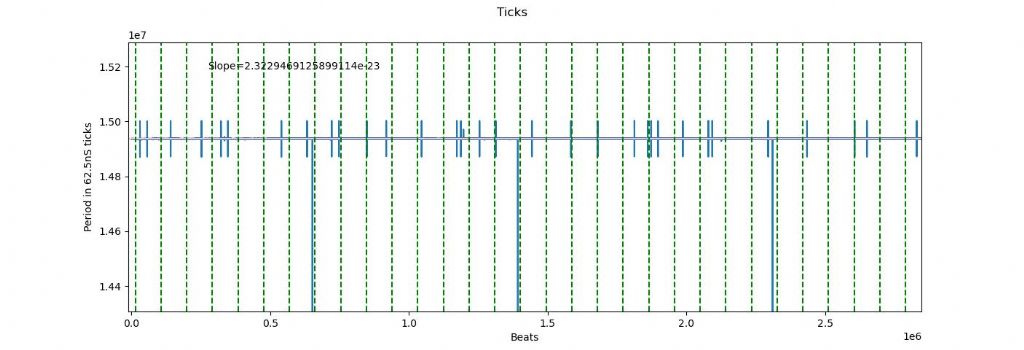

Spurious sensor readings are causing my pronblems rather than the computer as can be seen in this graph, where 3 giant errors cause the clock to jump:

I don't know what caused them. May be a coincidence they all occur just before midnight.

Zooming in on the data shows thirty odd more much smaller anomalies:

Although the smaller glitches balance out, they shouldn't be there. Best thing would be to find and fix the cause, but I've thought of filtering them out in software. Hard to find the cause of only 40 apparently random errors in 2.9 million readings, so it's tempting!
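One way the software filtering mentioned above might look is a rolling-median test: each reading is compared with the median of its neighbours, and anything too far away is flagged and replaced. This is only a sketch, not the filter used on the clock. The function name `despike`, the window size, the threshold and the 1 µs floor are all assumptions chosen for illustration.

```python
from statistics import median

def despike(periods, window=11, threshold=6.0, floor_s=1e-6):
    """Flag and replace readings that sit far from their local median.

    periods   : list of measured swing periods in seconds
    window    : number of neighbouring readings compared (assumed value)
    threshold : rejection limit in scaled-MAD units (assumed value)
    floor_s   : smallest spread allowed, to avoid dividing the world by zero
                when all neighbours are identical (assumed value)
    Returns (cleaned, flagged_indices).
    """
    half = window // 2
    cleaned = list(periods)
    flagged = []
    for i, value in enumerate(periods):
        # Neighbours are taken from the raw data, not the cleaned copy.
        neighbours = periods[max(0, i - half): i + half + 1]
        centre = median(neighbours)
        # Median absolute deviation; 1.4826 makes it comparable to a
        # standard deviation when the data are normally distributed.
        mad = median([abs(x - centre) for x in neighbours])
        scale = max(1.4826 * mad, floor_s)
        if abs(value - centre) > threshold * scale:
            flagged.append(i)
            cleaned[i] = centre  # substitute the local median
    return cleaned, flagged

# Made-up readings: a roughly 1 s period with one obvious glitch.
readings = [1.0, 1.0001, 0.9999, 1.0, 1.5, 1.0001,
            1.0, 0.9999, 1.0, 1.0001, 1.0]
cleaned, flagged = despike(readings, window=5)
print("glitches at indices:", flagged)
```

A median-based test is used here because a handful of wild readings barely move a median, whereas they would drag a mean and standard deviation towards themselves. Logging the flagged indices rather than silently dropping them keeps the evidence needed to hunt for the real cause.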

I can't get away from using a computer because I'm experimenting with a statistical clock. In this experiment the actual pendulum period doesn't matter provided all swings are normally distributed. Instead, detecting a swing causes the microcontroller to calculate and count what the period should be after compensating for temperature and pressure. The period calculating formula is derived from statistical analysis of a long pendulum run during which the pendulum is compared with a much better clock. (Bog standard NTP at present but I can also use GPS).
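The counting side of that idea can be sketched as below. The post does not give the actual formula, so the linear model, the class names `PeriodModel` and `StatisticalClock`, the reference temperature and pressure, and the coefficients are all hypothetical. In practice the coefficients would come from the statistical analysis of a long run against the reference clock.

```python
from dataclasses import dataclass

@dataclass
class PeriodModel:
    """Hypothetical linear model of the expected period:
    period = base_s + k_temp * (T - ref_temp_c) + k_press * (P - ref_press_hpa)
    """
    base_s: float                   # expected period at reference conditions
    k_temp: float                   # seconds per degree C (assumed form)
    k_press: float                  # seconds per hPa (assumed form)
    ref_temp_c: float = 20.0        # assumed reference temperature
    ref_press_hpa: float = 1013.25  # assumed reference pressure

    def period(self, temp_c, press_hpa):
        return (self.base_s
                + self.k_temp * (temp_c - self.ref_temp_c)
                + self.k_press * (press_hpa - self.ref_press_hpa))

class StatisticalClock:
    """Counts calculated periods, not measured ones."""

    def __init__(self, model):
        self.model = model
        self.elapsed_s = 0.0
        self.swings = 0

    def on_swing(self, temp_c, press_hpa):
        # A detected swing only triggers the count; the time added is
        # what the model says the period should have been.
        self.elapsed_s += self.model.period(temp_c, press_hpa)
        self.swings += 1
        return self.elapsed_s

# Illustrative coefficients only, not fitted values.
clock = StatisticalClock(PeriodModel(base_s=1.0, k_temp=1e-5, k_press=1e-6))
clock.on_swing(temp_c=20.0, press_hpa=1013.25)
clock.on_swing(temp_c=21.0, press_hpa=1013.25)
print(clock.swings, clock.elapsed_s)
```

Note that a spurious extra or missed swing detection adds or drops a whole calculated period, which is why sensor glitches show up as jumps in the clock.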

Despite progress, I'm not getting the precision I long for!

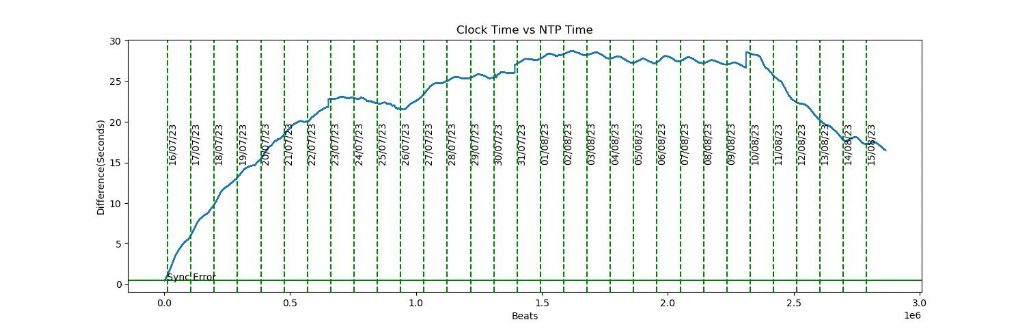

On the graph above the straight green line shows what the clock should be doing, i.e. tracking NTP within about 100 ms. The blue line shows my clock is wandering, and other graphs show the rate changes are not temperature or pressure related. The wandering blue line is bad news – straight lines are easy to compensate, random wandering is a horrible mystery. Roughly:

- Gained 23 seconds in 7 days, then

- Lost 2 seconds in 2 days, then

- Gained 8 seconds in 9 days, then

- Lost 1 second in 8 days, then

- Gained 1 second due to a sensor error, then

- Lost 14 seconds in 6 days.

After a month, never been more than 29 seconds wrong, and is currently only 17 seconds fast, not awful except I'm hoping for milliseconds per year, not half a minute per month! In theory my clock is working brilliantly: in practice it's below average. Unfortunately the experiment requires I persist with the computer!
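To put the rough figures above on a common scale, the arithmetic can be sketched as a running error plus a rate in parts per million for each stretch. The segment values are the rounded numbers from the list; treating the sensor error as an instantaneous step is an assumption, since no duration is given for it.

```python
SECONDS_PER_DAY = 86_400

# (change in seconds, duration in days), from the rough figures listed above.
# The sensor error has no stated duration, so it is entered as a 0-day step.
segments = [(23, 7), (-2, 2), (8, 9), (-1, 8), (1, 0), (-14, 6)]

def summarise(segments):
    """Return (change_s, days, rate_ppm or None, running_error_s) per segment."""
    rows = []
    total = 0
    for change_s, days in segments:
        total += change_s
        if days == 0:
            rate_ppm = None  # a step change has no meaningful rate
        else:
            rate_ppm = change_s / (days * SECONDS_PER_DAY) * 1e6
        rows.append((change_s, days, rate_ppm, total))
    return rows

for change_s, days, rate_ppm, total in summarise(segments):
    rate = "step" if rate_ppm is None else f"{rate_ppm:+.1f} ppm"
    print(f"{change_s:+4d} s over {days:2d} d  rate {rate:>10}  "
          f"running error {total:+d} s")
```

With these rounded inputs the running error peaks at 29 s, which agrees with the figure above, and finishes at about 15 s, a little short of the 17 s quoted, as might be expected from figures given as rough. The first week works out at roughly 38 ppm, whereas 1 ms per year is about 0.00003 ppm.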

Dave

Edited By SillyOldDuffer on 15/08/2023 21:00:31

John Haine.