Posted by david bennett 8 on 14/07/2023 21:52:13:

Has anyone added up the irreducible timing errors for every line of code read (and acted upon) by Arduino/Pi devices in this context? Or at least an average?

…

I've dabbled a bit: I've taken high-level timings to identify slow code, and also measured the variance of the USB link. (Serial interface times vary much more than Arduino code.)

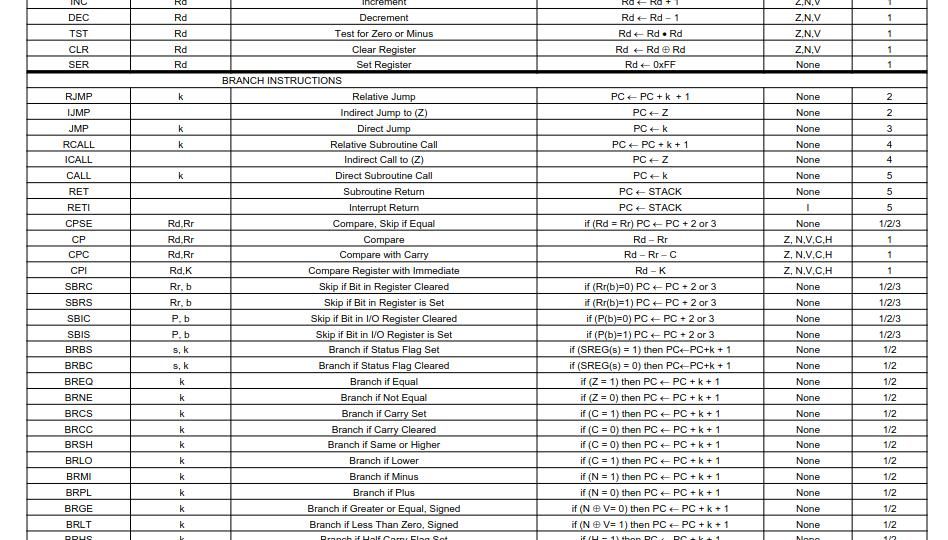

The datasheets of most microcontrollers state how many clock cycles each machine instruction takes. The clipped example shown here is from Section 29, page 292 of the ATmega16U2 datasheet, a CPU used by many Arduinos.

The last column shows how many clock cycles a particular instruction takes. On this type of processor most instructions execute in only one cycle. As the Arduino is clocked by a 16 MHz oscillator, they take 62.5 ns each. Other instructions take 2 or more cycles, and their times are multiples of 62.5 ns. Although it's best to write a program to do the analysis and counting, these instructions are easy to sum, so their timing is easy to predict. But read on…
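As a rough illustration of that summing, here is a minimal sketch that adds up fixed cycle counts and converts them to time. The helper name `straightLineNs` is made up for this example, and the cycle counts passed in would have to be read off the datasheet table by hand; only the 16 MHz clock comes from the post.

```cpp
#include <vector>

// One clock cycle at 16 MHz, the Arduino clock quoted in the post.
constexpr double kNsPerCycle = 1e9 / 16e6;  // 62.5 ns
static_assert(kNsPerCycle == 62.5, "16 MHz should give 62.5 ns per cycle");

// Hypothetical helper: time for straight-line code (no branches, no calls),
// given the per-instruction cycle counts taken from the datasheet table.
double straightLineNs(const std::vector<int>& cyclesPerInstruction) {
    long totalCycles = 0;
    for (int cycles : cyclesPerInstruction) {
        totalCycles += cycles;
    }
    return totalCycles * kNsPerCycle;
}
```

For example, three 1-cycle instructions and one 2-cycle instruction come to 5 cycles. This only works for instructions whose cycle count is fixed.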

However, other instructions, such as the Branch group, take 1 or 2 cycles depending on whether a condition is TRUE or FALSE. Their timings depend on the input and cannot be predicted exactly. Each one takes either 62.5 ns or 125 ns, so the average lies somewhere between the two, depending on the input.

You may have noticed in the table that subroutine calls are expensive, costing 5 cycles each (312.5 ns). They often occur in conjunction with branches: if TRUE then CALL subroutine, which makes the average time cost vary even more with the input.
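To put numbers on "somewhere between", here is a simplified averaging model. It assumes a branch costs 2 cycles when the condition is TRUE and 1 when FALSE, and it counts only the 5-cycle call itself, not the subroutine body or its return. The function names and the probability parameter `pTrue` are invented for illustration.

```cpp
constexpr double kNsPerCycle = 62.5;  // 16 MHz clock, from the post
constexpr double kCallCycles = 5.0;   // subroutine call cost quoted in the post

// Average time of one branch, where pTrue is the fraction of inputs
// for which the condition is TRUE.
// Assumption: TRUE costs 2 cycles, FALSE costs 1 cycle.
double expectedBranchNs(double pTrue) {
    const double cycles = pTrue * 2.0 + (1.0 - pTrue) * 1.0;
    return cycles * kNsPerCycle;
}

// Average time of "if TRUE then CALL": the branch always runs,
// the call only runs for the TRUE fraction of inputs.
// Simplification: the subroutine body and its return are not counted.
double expectedBranchAndCallNs(double pTrue) {
    return expectedBranchNs(pTrue) + pTrue * kCallCycles * kNsPerCycle;
}
```

With the condition TRUE half the time, the branch alone averages 93.75 ns and the branch plus call averages 250 ns. The real figure depends on how often each path is taken, which only the input data can tell you.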

Input can be data or events, often a mix. Events are often handled by Interrupts, which also make it hard to predict exact execution times. Interrupts are hardware driven. When one occurs, the processor is stopped mid-flight and saves its current state to memory. Then a special subroutine is executed to manage the event. That done, normal operation resumes: after restoring the previously saved state, the processor carries on. It's difficult to predict how long any section of code will take to execute when random events can interrupt and delay it.
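The effect on a timed section can be bounded with a very simple model: the section's own cycles plus a fixed cost for every interrupt that lands inside it. This is only a sketch. `sectionNs` and its parameters are hypothetical, and the cost per interrupt (state save, handler, state restore) has to be estimated or measured for the actual handler.

```cpp
#include <stdint.h>

constexpr double kNsPerCycle = 62.5;  // 16 MHz clock, from the post

// Hypothetical model: time for a code section of baseCycles cycles that is
// interrupted `interrupts` times, each interrupt costing cyclesPerInterrupt
// cycles in total (save state + handler + restore state).
double sectionNs(uint32_t baseCycles, uint32_t interrupts,
                 uint32_t cyclesPerInterrupt) {
    const double totalCycles =
        baseCycles + static_cast<double>(interrupts) * cyclesPerInterrupt;
    return totalCycles * kNsPerCycle;
}
```

Calling it with zero interrupts gives the best case, and calling it with the largest number of interrupts that could plausibly arrive gives a worst case. The gap between the two is the uncertainty the paragraph above describes.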

There is more timing uncertainty when the microcontroller exchanges data with another machine. For efficiency reasons, it's usual to send data in blocks rather than single bytes, and the other end may not be ready. Time variations caused by Input-Output are typically large: tens of milliseconds rather than nanoseconds.
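Because I/O timing can only be measured, not counted, a small summary of repeated measurements is usually the most useful figure. The sketch below assumes you already have a list of transfer times in milliseconds from your own logging. `TimingSummary` and `summarise` are names made up for this example.

```cpp
#include <vector>

// Smallest, largest and average of a set of measured transfer times.
struct TimingSummary {
    double minMs;
    double maxMs;
    double meanMs;
};

// Hypothetical helper: summarise measured I/O times (milliseconds).
// Returns all zeros when there are no samples.
TimingSummary summarise(const std::vector<double>& samplesMs) {
    if (samplesMs.empty()) {
        return TimingSummary{0.0, 0.0, 0.0};
    }
    TimingSummary s{samplesMs.front(), samplesMs.front(), 0.0};
    double total = 0.0;
    for (double v : samplesMs) {
        if (v < s.minMs) s.minMs = v;
        if (v > s.maxMs) s.maxMs = v;
        total += v;
    }
    s.meanMs = total / samplesMs.size();
    return s;
}
```

The spread between `minMs` and `maxMs` shows how much the link varies, and it is worth comparing against the variation in the code itself.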

The language, compiler and compiler settings add more uncertainty. 'C' is rather close to the hardware and tends to produce efficient machine code without the programmer doing anything special, hurrah. However, the Arduino compiler (g++) is set to produce space-efficient rather than speed-efficient code, which is not so good for time-critical work. It can be changed. There are a bunch of optimisations that can be adjusted case by case, but the programmer has to understand how appropriate these are to the problem. The machine code produced by the compiler varies, so all timings have to be checked after changes.
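One case-by-case adjustment is GCC's `optimize` function attribute, which asks for a different optimisation level on a single function while the rest of the build keeps its space-saving setting. This is a sketch rather than a recommendation. The `HOT_PATH` macro and the `checksum` routine are invented for the example, and whether `O2` actually helps has to be confirmed by re-timing.

```cpp
#include <stdint.h>

// The optimize attribute is GCC-specific, so define it away elsewhere.
#if defined(__GNUC__) && !defined(__clang__)
#define HOT_PATH __attribute__((optimize("O2")))
#else
#define HOT_PATH
#endif

// Hypothetical time-critical routine: request speed optimisation for this
// function only. The result is the same either way; only timing may differ.
HOT_PATH uint32_t checksum(const uint8_t* data, uint16_t len) {
    uint32_t sum = 0;
    for (uint16_t i = 0; i < len; ++i) {
        sum += data[i];
    }
    return sum;
}
```

Any change like this alters the generated machine code, so earlier timings no longer apply and need measuring again.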

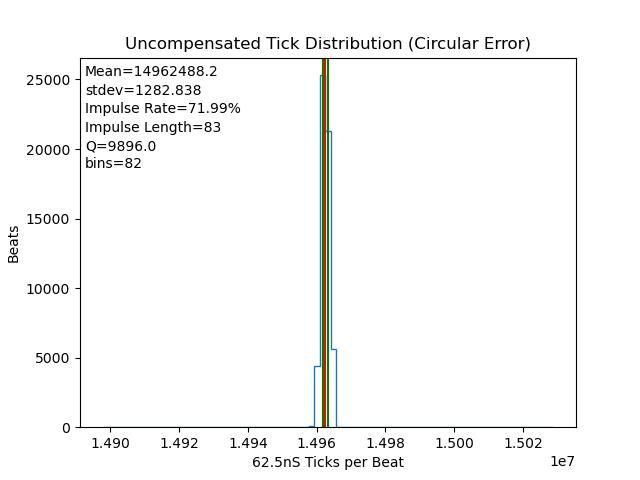

In practice, most microcontroller code isn't time critical, and performance can be measured 'on average'. Simple code is usually predictable, and the error caused by 1 second pendulum events is small. Not worth getting into deep analysis and micro-efficiencies unless an application really is time critical. My experimental clock pays moderate attention to timing – mainly ensuring the code doesn't dither.

Truly time-critical code is difficult to write and debug. There are techniques and tools, but they're all advanced. A fair bit can be done with the Arduino compiler by going backstage and altering the configuration. Also, code can be timed accurately by flipping a pin at the start and end of each critical section and measuring with an oscilloscope. Professionally, there are profilers and other tools that help.
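The pin-flipping technique looks roughly like this. The pin number, `probeSetup`, `criticalSection` and `timedCriticalSection` are all placeholders for illustration. The `#else` branch only provides stand-ins so the sketch compiles off-target; on a real board the Arduino `pinMode` and `digitalWrite` are used.

```cpp
#include <stdint.h>

#ifdef ARDUINO
#include <Arduino.h>
#else
// Host-side stand-ins (not the real Arduino API): they just record state.
static int lastPinState = -1;
static int toggleCount = 0;
constexpr int OUTPUT = 1, HIGH = 1, LOW = 0;
inline void pinMode(int, int) {}
inline void digitalWrite(int, int level) { lastPinState = level; ++toggleCount; }
#endif

constexpr int kProbePin = 7;  // hypothetical spare pin wired to the scope

// Call once at start-up: make the probe pin an output and drive it low.
void probeSetup() {
    pinMode(kProbePin, OUTPUT);
    digitalWrite(kProbePin, LOW);
}

// Placeholder for whatever code is being timed.
uint32_t criticalSection(uint32_t x) {
    return x * 3u + 1u;
}

// Raise the pin just before the section and drop it just after, so the
// pulse width on the oscilloscope is the section's execution time.
uint32_t timedCriticalSection(uint32_t x) {
    digitalWrite(kProbePin, HIGH);
    const uint32_t result = criticalSection(x);
    digitalWrite(kProbePin, LOW);
    return result;
}
```

Bear in mind that `digitalWrite` takes some cycles itself, so the pulse is slightly longer than the section. Timing an empty section first gives the overhead to subtract.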

Dave

John Haine.